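from sklearn.preprocessing import MinMaxScaler  # min-max normalization

from sklearn.model_selection import train_test_split  # split into training and test sets

from sklearn.metrics import mean_absolute_error  # mean absolute error (MAE)

# Keras is used to build the deep learning model

from keras.models import Sequential  # Sequential builds the network by adding one layer at a time

from keras.layers import Dense  # Dense builds fully connected layers (every node is linked to every node in the next layer)

import seaborn as sns

import matplotlib.pyplot as plt

Deep Learning: Regression Analysis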


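Boston Housing Price Prediction

- Boston housing price dataset and model structure

For nonlinear regression, the neural network needs at least one hidden layer.

The house price (MEDV, named MEDIV in the code) is the output node, and the remaining independent variables that affect the price are the input nodes.

The dummy variable CHAS, which takes only the values 0 and 1, is excluded.

- Activation function

- Hidden layers in deep learning usually use ReLU, but for regression the output node does not need an activation; the linear function \(a(x)=x\) then acts as its activation function.

- Cost function

- \(MSE=\dfrac{1}{n}\sum_{i=1}^n (y_i - \widehat y_i)^2\)

- Optimization method

- Adam

- Testing and evaluation

- Mean absolute error \(MAE=\dfrac{1}{n}\sum_{i=1}^n |y_i - \widehat y_i|\)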
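The cost and evaluation formulas above can be checked on a few numbers. The following is a minimal NumPy sketch; the helper names `mse` and `mae` and the arrays `y_true` and `y_pred` are made up for illustration and are not part of the notebook.

```python
import numpy as np

def mse(y_true, y_pred):
    """Mean squared error: the average of the squared residuals."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.mean((y_true - y_pred) ** 2))

def mae(y_true, y_pred):
    """Mean absolute error: the average of the absolute residuals."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.mean(np.abs(y_true - y_pred)))

# Hypothetical values, chosen so the residuals are -1, 1, 2, 0
y_true = [24.0, 21.5, 34.5, 33.0]
y_pred = [25.0, 20.5, 32.5, 33.0]

print(mse(y_true, y_pred))  # (1 + 1 + 4 + 0) / 4
print(mae(y_true, y_pred))  # (1 + 1 + 2 + 0) / 4
```

Because MSE squares each residual, the single residual of 2 contributes four times as much as a residual of 1, whereas MAE weights them in proportion to their size.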

1. Package setup

This dataset is no longer available in scikit-learn: load_boston was removed in version 1.2.

from sklearn.datasets import load_boston

In this special case, you can fetch the dataset from the original source::

import pandas as pd

import numpy as np

data_url = "http://lib.stat.cmu.edu/datasets/boston"

raw_df = pd.read_csv(data_url, sep=r"\s+", skiprows=22, header=None)

data = np.hstack([raw_df.values[::2, :], raw_df.values[1::2, :2]])

target = raw_df.values[1::2, 2]

import pandas as pd

import numpy as np

data_url = "http://lib.stat.cmu.edu/datasets/boston"

raw_df = pd.read_csv(data_url, sep=r"\s+", skiprows=22)

data = raw_df.values[:, :-1]

target = raw_df.values[:, -1]

import pandas as pd

import numpy as np

data_url = "http://lib.stat.cmu.edu/datasets/boston"

raw_df = pd.read_csv(data_url, sep=r"\s+", skiprows=21, header=None)

# Each record spans two lines in the raw file: 11 values on the first line and 3 on the second

# The 14th variable (MEDV) is used as the target

# Stack the two lines side by side, then separate the features from the target

data2 = np.hstack([raw_df.values[::2, :], raw_df.values[1::2, :3]])

feautre_names=['CRIM','ZN','INDUS','CHAS','NOX','RM','AGE','DIS','RAD','TAX','PTRATIO','B','LSTAT']

# Check the size of the dataset

print(data2.shape) # (506, 14)

(506, 14)

data=data2[:,:-1]

target=data2[:,-1]

print(data.shape) # (506, 13)

print(target.shape) # (506,)

(506, 13)

(506,)

2. Data preparation

X=pd.DataFrame(data, columns=feautre_names)

print(X)

CRIM ZN INDUS CHAS NOX RM AGE DIS RAD TAX \

0 0.00632 18.0 2.31 0.0 0.538 6.575 65.2 4.0900 1.0 296.0

1 0.02731 0.0 7.07 0.0 0.469 6.421 78.9 4.9671 2.0 242.0

2 0.02729 0.0 7.07 0.0 0.469 7.185 61.1 4.9671 2.0 242.0

3 0.03237 0.0 2.18 0.0 0.458 6.998 45.8 6.0622 3.0 222.0

4 0.06905 0.0 2.18 0.0 0.458 7.147 54.2 6.0622 3.0 222.0

.. ... ... ... ... ... ... ... ... ... ...

501 0.06263 0.0 11.93 0.0 0.573 6.593 69.1 2.4786 1.0 273.0

502 0.04527 0.0 11.93 0.0 0.573 6.120 76.7 2.2875 1.0 273.0

503 0.06076 0.0 11.93 0.0 0.573 6.976 91.0 2.1675 1.0 273.0

504 0.10959 0.0 11.93 0.0 0.573 6.794 89.3 2.3889 1.0 273.0

505 0.04741 0.0 11.93 0.0 0.573 6.030 80.8 2.5050 1.0 273.0

PTRATIO B LSTAT

0 15.3 396.90 4.98

1 17.8 396.90 9.14

2 17.8 392.83 4.03

3 18.7 394.63 2.94

4 18.7 396.90 5.33

.. ... ... ...

501 21.0 391.99 9.67

502 21.0 396.90 9.08

503 21.0 396.90 5.64

504 21.0 393.45 6.48

505 21.0 396.90 7.88

[506 rows x 13 columns]

- Exclude the dummy variable CHAS

X=X.drop(['CHAS'],axis=1)

print(X.head())

CRIM ZN INDUS NOX RM AGE DIS RAD TAX PTRATIO \

0 0.00632 18.0 2.31 0.538 6.575 65.2 4.0900 1.0 296.0 15.3

1 0.02731 0.0 7.07 0.469 6.421 78.9 4.9671 2.0 242.0 17.8

2 0.02729 0.0 7.07 0.469 7.185 61.1 4.9671 2.0 242.0 17.8

3 0.03237 0.0 2.18 0.458 6.998 45.8 6.0622 3.0 222.0 18.7

4 0.06905 0.0 2.18 0.458 7.147 54.2 6.0622 3.0 222.0 18.7

B LSTAT

0 396.90 4.98

1 396.90 9.14

2 392.83 4.03

3 394.63 2.94

4 396.90 5.33

X.info()

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 506 entries, 0 to 505

Data columns (total 12 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 CRIM 506 non-null float64

1 ZN 506 non-null float64

2 INDUS 506 non-null float64

3 NOX 506 non-null float64

4 RM 506 non-null float64

5 AGE 506 non-null float64

6 DIS 506 non-null float64

7 RAD 506 non-null float64

8 TAX 506 non-null float64

9 PTRATIO 506 non-null float64

10 B 506 non-null float64

11 LSTAT 506 non-null float64

dtypes: float64(12)

memory usage: 47.6 KB

y=pd.DataFrame(target)

print(y)

0

0 24.0

1 21.6

2 34.7

3 33.4

4 36.2

.. ...

501 22.4

502 20.6

503 23.9

504 22.0

505 11.9

[506 rows x 1 columns]

3. Exploratory data analysis

boston_df=pd.DataFrame(data=X)

boston_df["MEDIV"]=target

print(boston_df)

CRIM ZN INDUS NOX RM AGE DIS RAD TAX PTRATIO \

0 0.00632 18.0 2.31 0.538 6.575 65.2 4.0900 1.0 296.0 15.3

1 0.02731 0.0 7.07 0.469 6.421 78.9 4.9671 2.0 242.0 17.8

2 0.02729 0.0 7.07 0.469 7.185 61.1 4.9671 2.0 242.0 17.8

3 0.03237 0.0 2.18 0.458 6.998 45.8 6.0622 3.0 222.0 18.7

4 0.06905 0.0 2.18 0.458 7.147 54.2 6.0622 3.0 222.0 18.7

.. ... ... ... ... ... ... ... ... ... ...

501 0.06263 0.0 11.93 0.573 6.593 69.1 2.4786 1.0 273.0 21.0

502 0.04527 0.0 11.93 0.573 6.120 76.7 2.2875 1.0 273.0 21.0

503 0.06076 0.0 11.93 0.573 6.976 91.0 2.1675 1.0 273.0 21.0

504 0.10959 0.0 11.93 0.573 6.794 89.3 2.3889 1.0 273.0 21.0

505 0.04741 0.0 11.93 0.573 6.030 80.8 2.5050 1.0 273.0 21.0

B LSTAT MEDIV

0 396.90 4.98 24.0

1 396.90 9.14 21.6

2 392.83 4.03 34.7

3 394.63 2.94 33.4

4 396.90 5.33 36.2

.. ... ... ...

501 391.99 9.67 22.4

502 396.90 9.08 20.6

503 396.90 5.64 23.9

504 393.45 6.48 22.0

505 396.90 7.88 11.9

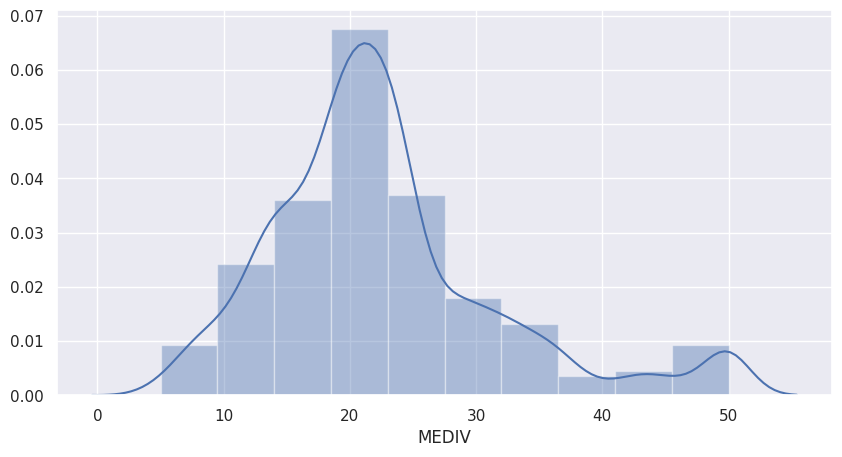

[506 rows x 13 columns]

- Histogram

# Histogram of the target variable

sns.set(rc={'figure.figsize':(10,5)})

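# distplot is deprecated in recent seaborn releases; histplot with a KDE curve on a density scale draws a comparable plot

sns.histplot(boston_df['MEDIV'], bins=10, kde=True, stat="density")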

plt.show()

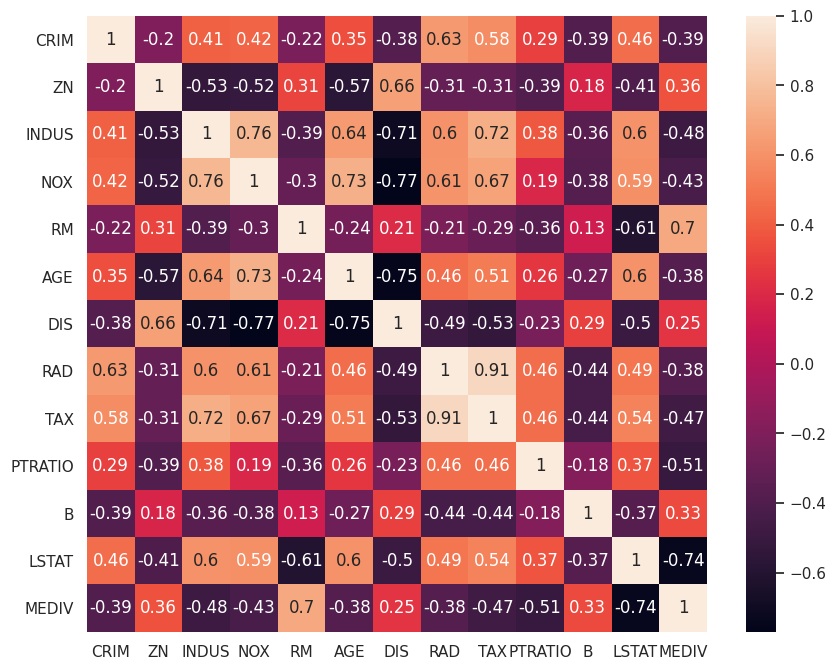

- Correlation coefficients

# Correlation coefficients between all pairs of variables

correlation_matrix=boston_df.corr().round(2)

correlation_matrix

| | CRIM | ZN | INDUS | NOX | RM | AGE | DIS | RAD | TAX | PTRATIO | B | LSTAT | MEDIV |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| CRIM | 1.00 | -0.20 | 0.41 | 0.42 | -0.22 | 0.35 | -0.38 | 0.63 | 0.58 | 0.29 | -0.39 | 0.46 | -0.39 |
| ZN | -0.20 | 1.00 | -0.53 | -0.52 | 0.31 | -0.57 | 0.66 | -0.31 | -0.31 | -0.39 | 0.18 | -0.41 | 0.36 |
| INDUS | 0.41 | -0.53 | 1.00 | 0.76 | -0.39 | 0.64 | -0.71 | 0.60 | 0.72 | 0.38 | -0.36 | 0.60 | -0.48 |
| NOX | 0.42 | -0.52 | 0.76 | 1.00 | -0.30 | 0.73 | -0.77 | 0.61 | 0.67 | 0.19 | -0.38 | 0.59 | -0.43 |
| RM | -0.22 | 0.31 | -0.39 | -0.30 | 1.00 | -0.24 | 0.21 | -0.21 | -0.29 | -0.36 | 0.13 | -0.61 | 0.70 |
| AGE | 0.35 | -0.57 | 0.64 | 0.73 | -0.24 | 1.00 | -0.75 | 0.46 | 0.51 | 0.26 | -0.27 | 0.60 | -0.38 |
| DIS | -0.38 | 0.66 | -0.71 | -0.77 | 0.21 | -0.75 | 1.00 | -0.49 | -0.53 | -0.23 | 0.29 | -0.50 | 0.25 |
| RAD | 0.63 | -0.31 | 0.60 | 0.61 | -0.21 | 0.46 | -0.49 | 1.00 | 0.91 | 0.46 | -0.44 | 0.49 | -0.38 |
| TAX | 0.58 | -0.31 | 0.72 | 0.67 | -0.29 | 0.51 | -0.53 | 0.91 | 1.00 | 0.46 | -0.44 | 0.54 | -0.47 |
| PTRATIO | 0.29 | -0.39 | 0.38 | 0.19 | -0.36 | 0.26 | -0.23 | 0.46 | 0.46 | 1.00 | -0.18 | 0.37 | -0.51 |
| B | -0.39 | 0.18 | -0.36 | -0.38 | 0.13 | -0.27 | 0.29 | -0.44 | -0.44 | -0.18 | 1.00 | -0.37 | 0.33 |
| LSTAT | 0.46 | -0.41 | 0.60 | 0.59 | -0.61 | 0.60 | -0.50 | 0.49 | 0.54 | 0.37 | -0.37 | 1.00 | -0.74 |
| MEDIV | -0.39 | 0.36 | -0.48 | -0.43 | 0.70 | -0.38 | 0.25 | -0.38 | -0.47 | -0.51 | 0.33 | -0.74 | 1.00 |

sns.set(rc={'figure.figsize':(10,8)})

sns.heatmap(data=correlation_matrix,annot=True)

plt.show()

4. Data split

- Split the data into training and test sets at a 7:3 ratio.
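To see what a 7:3 split does to 506 rows, here is a minimal NumPy sketch of a shuffled index split. The function `split_indices` and its `seed` argument are hypothetical and written only for illustration; rounding the test count up is an assumption chosen so that the sizes agree with the 354 and 152 rows reported in this notebook. It is not the internal implementation of train_test_split.

```python
import math
import numpy as np

def split_indices(n_samples, test_size=0.3, seed=0):
    """Shuffle the row indices and cut them into train and test parts."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(n_samples)
    # Round the test count up (assumption made to match the shapes in this notebook)
    n_test = math.ceil(test_size * n_samples)
    return order[n_test:], order[:n_test]

train_idx, test_idx = split_indices(506, test_size=0.3, seed=0)
print(len(train_idx), len(test_idx))
```

With a fixed seed the same rows land in each part on every run. When calling train_test_split itself, the `random_state` argument plays that role; without it, each run produces a different split and therefore slightly different scaled values and training curves.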

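X.shape

(506, 13)

# X now has 13 columns because MEDIV was added through boston_df above; drop it to keep only the features

X=X.drop(['MEDIV'],axis=1)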

X

| | CRIM | ZN | INDUS | NOX | RM | AGE | DIS | RAD | TAX | PTRATIO | B | LSTAT |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 0.00632 | 18.0 | 2.31 | 0.538 | 6.575 | 65.2 | 4.0900 | 1.0 | 296.0 | 15.3 | 396.90 | 4.98 |
| 1 | 0.02731 | 0.0 | 7.07 | 0.469 | 6.421 | 78.9 | 4.9671 | 2.0 | 242.0 | 17.8 | 396.90 | 9.14 |
| 2 | 0.02729 | 0.0 | 7.07 | 0.469 | 7.185 | 61.1 | 4.9671 | 2.0 | 242.0 | 17.8 | 392.83 | 4.03 |
| 3 | 0.03237 | 0.0 | 2.18 | 0.458 | 6.998 | 45.8 | 6.0622 | 3.0 | 222.0 | 18.7 | 394.63 | 2.94 |
| 4 | 0.06905 | 0.0 | 2.18 | 0.458 | 7.147 | 54.2 | 6.0622 | 3.0 | 222.0 | 18.7 | 396.90 | 5.33 |
| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |
| 501 | 0.06263 | 0.0 | 11.93 | 0.573 | 6.593 | 69.1 | 2.4786 | 1.0 | 273.0 | 21.0 | 391.99 | 9.67 |
| 502 | 0.04527 | 0.0 | 11.93 | 0.573 | 6.120 | 76.7 | 2.2875 | 1.0 | 273.0 | 21.0 | 396.90 | 9.08 |
| 503 | 0.06076 | 0.0 | 11.93 | 0.573 | 6.976 | 91.0 | 2.1675 | 1.0 | 273.0 | 21.0 | 396.90 | 5.64 |
| 504 | 0.10959 | 0.0 | 11.93 | 0.573 | 6.794 | 89.3 | 2.3889 | 1.0 | 273.0 | 21.0 | 393.45 | 6.48 |
| 505 | 0.04741 | 0.0 | 11.93 | 0.573 | 6.030 | 80.8 | 2.5050 | 1.0 | 273.0 | 21.0 | 396.90 | 7.88 |

506 rows × 12 columns

X_train, X_test, y_train, y_test = train_test_split(X,y,test_size=0.3)

5. Feature scaling

- Create a normalization scaler fitted on the training inputs, then use it to scale the input data to the 0 to 1 range.
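The arithmetic behind this step can be sketched by hand. The snippet below is an illustrative NumPy version of min-max scaling, not the MinMaxScaler source; the helpers `fit_min_max` and `transform_min_max` and the toy arrays are hypothetical. It shows why the minimum and range are learned from the training data only, and why test values can then fall slightly outside 0 to 1, as the small negative entry in the printed X_test_norm does.

```python
import numpy as np

def fit_min_max(train):
    """Learn the per-column minimum and range from the training data only."""
    col_min = train.min(axis=0)
    col_range = train.max(axis=0) - col_min
    col_range[col_range == 0] = 1.0  # keep constant columns from dividing by zero
    return col_min, col_range

def transform_min_max(x, col_min, col_range):
    """Apply the training statistics to any data set."""
    return (x - col_min) / col_range

# Hypothetical toy data: two features, three training rows, two test rows
train = np.array([[1.0, 10.0], [3.0, 30.0], [5.0, 20.0]])
test = np.array([[0.0, 40.0], [4.0, 15.0]])

col_min, col_range = fit_min_max(train)
train_norm = transform_min_max(train, col_min, col_range)
test_norm = transform_min_max(test, col_min, col_range)

print(train_norm)  # every column spans exactly 0 to 1
print(test_norm)   # the first test row falls outside that range
```

Fitting on the training rows alone keeps information about the test set out of the preprocessing, at the cost of test values that are not strictly bounded.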

# Create the normalization scaler

scalerX=MinMaxScaler()

# Fit the scaler to the training data

scalerX.fit(X_train)

# Transform the training data with the scaler

X_train_norm=scalerX.transform(X_train)

# Transform the test data with the same scaler

X_test_norm = scalerX.transform(X_test)

print(X_train_norm)

[[0.00680253 0.2 0.11962963 ... 0.04255319 0.98184477 0.08724646]

[0.07235853 0. 0.64296296 ... 0.80851064 0.24617984 0.27924423]

[0.1610363 0. 0.64296296 ... 0.80851064 0.93953301 0.7957766 ]

...

[0.11494542 0. 0.64296296 ... 0.80851064 0.95662918 0.44595721]

[0.1717624 0. 0.64296296 ... 0.80851064 0.91456957 0.59071964]

[0.00187693 0. 0.33148148 ... 0.70212766 1. 0.27868852]]

X_train_norm.shape

(354, 12)

print(X_test_norm)

[[ 2.05400974e-02 0.00000000e+00 6.97777778e-01 ... 2.23404255e-01

9.81617832e-01 -1.66712976e-03]

[ 1.09008802e-02 0.00000000e+00 7.83333333e-01 ... 9.14893617e-01

6.61758031e-01 4.25951653e-01]

[ 4.66707160e-01 0.00000000e+00 6.42962963e-01 ... 8.08510638e-01

8.29946039e-01 7.05751598e-01]

...

[ 1.61036297e-01 0.00000000e+00 6.42962963e-01 ... 8.08510638e-01

9.65757224e-01 3.09252570e-01]

[ 9.47848868e-04 2.00000000e-01 2.30370370e-01 ... 6.38297872e-01

9.85980130e-01 3.24256738e-01]

[ 4.01371790e-04 2.80000000e-01 5.29629630e-01 ... 5.95744681e-01

9.95234253e-01 1.71714365e-01]]

X_test_norm.shape

(152, 12)

# Create the normalization scaler for the target

scalerY=MinMaxScaler()

# Fit the scaler to the training data

scalerY.fit(y_train)

# Transform the training data with the scaler

y_train_norm=scalerY.transform(y_train)

# Transform the test data with the same scaler

y_test_norm = scalerY.transform(y_test)

print(y_train_norm[0:10])

[[1. ]

[0.24666667]

[0.11555556]

[0.3 ]

[0.17111111]

[0.30888889]

[0.28444444]

[0.32666667]

[0.33111111]

[0.52444444]]

y_train_norm.shape

(354, 1)

print(y_test_norm[0:10])

[[1. ]

[0.23555556]

[0.07777778]

[0.12222222]

[0.53555556]

[0.92666667]

[0.40222222]

[0.58 ]

[0.57333333]

[0.34888889]]

y_test_norm.shape

(152, 1)

6. Modeling and training

model=Sequential() # sequential model

model.add(Dense(60,activation='relu', input_shape=(12,))) # input layer and first hidden layer

model.add(Dense(60,activation='relu')) # second hidden layer

model.add(Dense(30,activation='relu')) # third hidden layer

model.add(Dense(1)) # output layer (linear activation)

model.summary()

Model: "sequential_3"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

dense_12 (Dense) (None, 60) 780

dense_13 (Dense) (None, 60) 3660

dense_14 (Dense) (None, 30) 1830

dense_15 (Dense) (None, 1) 31

=================================================================

Total params: 6,301

Trainable params: 6,301

Non-trainable params: 0

_________________________________________________________________

- Param #: the first hidden layer has 12x60=720 weights and 60 biases, 780 parameters in total.
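The same rule, inputs times units plus one bias per unit, reproduces every row of the summary. The following plain-Python sketch uses a hypothetical helper `dense_params` and the layer widths from the model above.

```python
def dense_params(n_inputs, n_units):
    """Parameters of a fully connected layer: one weight per input-unit pair plus one bias per unit."""
    return n_inputs * n_units + n_units

# Layer widths from the model above: 12 inputs, then 60, 60, 30 and 1 units
layer_sizes = [12, 60, 60, 30, 1]

counts = [dense_params(a, b) for a, b in zip(layer_sizes, layer_sizes[1:])]
print(counts)       # [780, 3660, 1830, 31]
print(sum(counts))  # 6301
```

The per-layer values and the total agree with the Param # column and the Total params line printed by model.summary().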

model.compile(optimizer='adam',
              loss='mse',
              metrics=['mae'])

- Training

results=model.fit(X_train_norm, y_train_norm,
                  validation_data=(X_test_norm, y_test_norm),
                  epochs=200, batch_size=32)

Epoch 1/200

12/12 [==============================] - 0s 7ms/step - loss: 0.1031 - mae: 0.2534 - val_loss: 0.0570 - val_mae: 0.1836

Epoch 2/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0472 - mae: 0.1690 - val_loss: 0.0401 - val_mae: 0.1482

Epoch 3/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0326 - mae: 0.1291 - val_loss: 0.0295 - val_mae: 0.1203

Epoch 4/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0244 - mae: 0.1095 - val_loss: 0.0234 - val_mae: 0.1124

Epoch 5/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0224 - mae: 0.1113 - val_loss: 0.0205 - val_mae: 0.0991

Epoch 6/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0176 - mae: 0.0876 - val_loss: 0.0162 - val_mae: 0.0880

Epoch 7/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0144 - mae: 0.0785 - val_loss: 0.0135 - val_mae: 0.0801

Epoch 8/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0123 - mae: 0.0711 - val_loss: 0.0118 - val_mae: 0.0791

Epoch 9/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0110 - mae: 0.0675 - val_loss: 0.0108 - val_mae: 0.0707

Epoch 10/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0100 - mae: 0.0645 - val_loss: 0.0105 - val_mae: 0.0756

Epoch 11/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0097 - mae: 0.0650 - val_loss: 0.0095 - val_mae: 0.0690

Epoch 12/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0089 - mae: 0.0612 - val_loss: 0.0092 - val_mae: 0.0682

Epoch 13/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0082 - mae: 0.0588 - val_loss: 0.0090 - val_mae: 0.0672

Epoch 14/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0080 - mae: 0.0565 - val_loss: 0.0102 - val_mae: 0.0783

Epoch 15/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0089 - mae: 0.0635 - val_loss: 0.0090 - val_mae: 0.0702

Epoch 16/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0121 - mae: 0.0826 - val_loss: 0.0117 - val_mae: 0.0826

Epoch 17/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0088 - mae: 0.0666 - val_loss: 0.0088 - val_mae: 0.0703

Epoch 18/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0069 - mae: 0.0544 - val_loss: 0.0077 - val_mae: 0.0625

Epoch 19/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0067 - mae: 0.0540 - val_loss: 0.0073 - val_mae: 0.0614

Epoch 20/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0062 - mae: 0.0518 - val_loss: 0.0072 - val_mae: 0.0605

Epoch 21/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0063 - mae: 0.0513 - val_loss: 0.0070 - val_mae: 0.0599

Epoch 22/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0059 - mae: 0.0507 - val_loss: 0.0069 - val_mae: 0.0594

Epoch 23/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0064 - mae: 0.0518 - val_loss: 0.0075 - val_mae: 0.0646

Epoch 24/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0061 - mae: 0.0507 - val_loss: 0.0065 - val_mae: 0.0573

Epoch 25/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0057 - mae: 0.0495 - val_loss: 0.0064 - val_mae: 0.0568

Epoch 26/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0055 - mae: 0.0504 - val_loss: 0.0067 - val_mae: 0.0580

Epoch 27/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0058 - mae: 0.0512 - val_loss: 0.0066 - val_mae: 0.0570

Epoch 28/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0056 - mae: 0.0487 - val_loss: 0.0064 - val_mae: 0.0564

Epoch 29/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0052 - mae: 0.0484 - val_loss: 0.0063 - val_mae: 0.0554

Epoch 30/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0049 - mae: 0.0469 - val_loss: 0.0063 - val_mae: 0.0560

Epoch 31/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0049 - mae: 0.0472 - val_loss: 0.0061 - val_mae: 0.0545

Epoch 32/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0045 - mae: 0.0448 - val_loss: 0.0063 - val_mae: 0.0546

Epoch 33/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0045 - mae: 0.0452 - val_loss: 0.0061 - val_mae: 0.0537

Epoch 34/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0044 - mae: 0.0456 - val_loss: 0.0061 - val_mae: 0.0540

Epoch 35/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0045 - mae: 0.0454 - val_loss: 0.0061 - val_mae: 0.0533

Epoch 36/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0041 - mae: 0.0436 - val_loss: 0.0060 - val_mae: 0.0520

Epoch 37/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0043 - mae: 0.0449 - val_loss: 0.0062 - val_mae: 0.0529

Epoch 38/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0041 - mae: 0.0444 - val_loss: 0.0060 - val_mae: 0.0529

Epoch 39/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0040 - mae: 0.0439 - val_loss: 0.0060 - val_mae: 0.0518

Epoch 40/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0046 - mae: 0.0481 - val_loss: 0.0087 - val_mae: 0.0648

Epoch 41/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0046 - mae: 0.0467 - val_loss: 0.0060 - val_mae: 0.0524

Epoch 42/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0041 - mae: 0.0453 - val_loss: 0.0060 - val_mae: 0.0533

Epoch 43/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0043 - mae: 0.0454 - val_loss: 0.0067 - val_mae: 0.0548

Epoch 44/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0045 - mae: 0.0501 - val_loss: 0.0060 - val_mae: 0.0503

Epoch 45/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0039 - mae: 0.0451 - val_loss: 0.0063 - val_mae: 0.0548

Epoch 46/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0038 - mae: 0.0446 - val_loss: 0.0056 - val_mae: 0.0494

Epoch 47/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0035 - mae: 0.0423 - val_loss: 0.0057 - val_mae: 0.0496

Epoch 48/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0037 - mae: 0.0426 - val_loss: 0.0060 - val_mae: 0.0509

Epoch 49/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0036 - mae: 0.0425 - val_loss: 0.0071 - val_mae: 0.0555

Epoch 50/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0092 - mae: 0.0708 - val_loss: 0.0089 - val_mae: 0.0701

Epoch 51/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0050 - mae: 0.0526 - val_loss: 0.0069 - val_mae: 0.0579

Epoch 52/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0041 - mae: 0.0456 - val_loss: 0.0060 - val_mae: 0.0510

Epoch 53/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0041 - mae: 0.0462 - val_loss: 0.0062 - val_mae: 0.0528

Epoch 54/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0033 - mae: 0.0409 - val_loss: 0.0063 - val_mae: 0.0516

Epoch 55/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0034 - mae: 0.0414 - val_loss: 0.0064 - val_mae: 0.0529

Epoch 56/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0034 - mae: 0.0414 - val_loss: 0.0064 - val_mae: 0.0545

Epoch 57/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0035 - mae: 0.0401 - val_loss: 0.0062 - val_mae: 0.0504

Epoch 58/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0031 - mae: 0.0387 - val_loss: 0.0065 - val_mae: 0.0525

Epoch 59/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0030 - mae: 0.0385 - val_loss: 0.0068 - val_mae: 0.0541

Epoch 60/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0031 - mae: 0.0404 - val_loss: 0.0061 - val_mae: 0.0506

Epoch 61/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0034 - mae: 0.0425 - val_loss: 0.0071 - val_mae: 0.0564

Epoch 62/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0033 - mae: 0.0405 - val_loss: 0.0070 - val_mae: 0.0545

Epoch 63/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0031 - mae: 0.0395 - val_loss: 0.0068 - val_mae: 0.0534

Epoch 64/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0029 - mae: 0.0399 - val_loss: 0.0064 - val_mae: 0.0527

Epoch 65/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0028 - mae: 0.0382 - val_loss: 0.0062 - val_mae: 0.0517

Epoch 66/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0028 - mae: 0.0379 - val_loss: 0.0063 - val_mae: 0.0512

Epoch 67/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0027 - mae: 0.0379 - val_loss: 0.0062 - val_mae: 0.0505

Epoch 68/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0026 - mae: 0.0365 - val_loss: 0.0063 - val_mae: 0.0513

Epoch 69/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0029 - mae: 0.0383 - val_loss: 0.0066 - val_mae: 0.0514

Epoch 70/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0027 - mae: 0.0370 - val_loss: 0.0066 - val_mae: 0.0505

Epoch 71/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0029 - mae: 0.0386 - val_loss: 0.0066 - val_mae: 0.0520

Epoch 72/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0026 - mae: 0.0373 - val_loss: 0.0067 - val_mae: 0.0515

Epoch 73/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0025 - mae: 0.0367 - val_loss: 0.0063 - val_mae: 0.0509

Epoch 74/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0026 - mae: 0.0364 - val_loss: 0.0064 - val_mae: 0.0519

Epoch 75/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0034 - mae: 0.0422 - val_loss: 0.0060 - val_mae: 0.0496

Epoch 76/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0026 - mae: 0.0353 - val_loss: 0.0068 - val_mae: 0.0534

Epoch 77/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0027 - mae: 0.0381 - val_loss: 0.0064 - val_mae: 0.0499

Epoch 78/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0024 - mae: 0.0357 - val_loss: 0.0060 - val_mae: 0.0495

Epoch 79/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0024 - mae: 0.0353 - val_loss: 0.0059 - val_mae: 0.0490

Epoch 80/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0025 - mae: 0.0366 - val_loss: 0.0065 - val_mae: 0.0517

Epoch 81/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0024 - mae: 0.0363 - val_loss: 0.0064 - val_mae: 0.0507

Epoch 82/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0023 - mae: 0.0350 - val_loss: 0.0062 - val_mae: 0.0499

Epoch 83/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0022 - mae: 0.0342 - val_loss: 0.0064 - val_mae: 0.0513

Epoch 84/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0023 - mae: 0.0351 - val_loss: 0.0062 - val_mae: 0.0499

Epoch 85/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0022 - mae: 0.0335 - val_loss: 0.0061 - val_mae: 0.0500

Epoch 86/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0022 - mae: 0.0346 - val_loss: 0.0062 - val_mae: 0.0517

Epoch 87/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0022 - mae: 0.0345 - val_loss: 0.0061 - val_mae: 0.0507

Epoch 88/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0021 - mae: 0.0331 - val_loss: 0.0061 - val_mae: 0.0500

Epoch 89/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0020 - mae: 0.0331 - val_loss: 0.0062 - val_mae: 0.0512

Epoch 90/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0021 - mae: 0.0330 - val_loss: 0.0062 - val_mae: 0.0498

Epoch 91/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0020 - mae: 0.0327 - val_loss: 0.0062 - val_mae: 0.0496

Epoch 92/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0020 - mae: 0.0328 - val_loss: 0.0061 - val_mae: 0.0496

Epoch 93/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0019 - mae: 0.0319 - val_loss: 0.0063 - val_mae: 0.0506

Epoch 94/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0020 - mae: 0.0329 - val_loss: 0.0062 - val_mae: 0.0506

Epoch 95/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0019 - mae: 0.0318 - val_loss: 0.0061 - val_mae: 0.0503

Epoch 96/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0018 - mae: 0.0315 - val_loss: 0.0062 - val_mae: 0.0507

Epoch 97/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0018 - mae: 0.0317 - val_loss: 0.0061 - val_mae: 0.0501

Epoch 98/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0018 - mae: 0.0315 - val_loss: 0.0063 - val_mae: 0.0505

Epoch 99/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0019 - mae: 0.0330 - val_loss: 0.0062 - val_mae: 0.0498

Epoch 100/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0019 - mae: 0.0313 - val_loss: 0.0067 - val_mae: 0.0527

Epoch 101/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0018 - mae: 0.0310 - val_loss: 0.0063 - val_mae: 0.0507

Epoch 102/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0018 - mae: 0.0318 - val_loss: 0.0066 - val_mae: 0.0519

Epoch 103/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0021 - mae: 0.0343 - val_loss: 0.0063 - val_mae: 0.0517

Epoch 104/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0018 - mae: 0.0317 - val_loss: 0.0063 - val_mae: 0.0508

Epoch 105/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0019 - mae: 0.0325 - val_loss: 0.0065 - val_mae: 0.0513

Epoch 106/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0021 - mae: 0.0339 - val_loss: 0.0060 - val_mae: 0.0501

Epoch 107/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0018 - mae: 0.0311 - val_loss: 0.0068 - val_mae: 0.0528

Epoch 108/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0026 - mae: 0.0386 - val_loss: 0.0067 - val_mae: 0.0512

Epoch 109/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0020 - mae: 0.0335 - val_loss: 0.0070 - val_mae: 0.0530

Epoch 110/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0028 - mae: 0.0404 - val_loss: 0.0065 - val_mae: 0.0517

Epoch 111/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0029 - mae: 0.0407 - val_loss: 0.0058 - val_mae: 0.0501

Epoch 112/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0019 - mae: 0.0328 - val_loss: 0.0060 - val_mae: 0.0507

Epoch 113/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0019 - mae: 0.0331 - val_loss: 0.0059 - val_mae: 0.0495

Epoch 114/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0021 - mae: 0.0341 - val_loss: 0.0064 - val_mae: 0.0505

Epoch 115/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0020 - mae: 0.0324 - val_loss: 0.0065 - val_mae: 0.0518

Epoch 116/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0017 - mae: 0.0303 - val_loss: 0.0061 - val_mae: 0.0500

Epoch 117/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0017 - mae: 0.0303 - val_loss: 0.0060 - val_mae: 0.0502

Epoch 118/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0018 - mae: 0.0320 - val_loss: 0.0062 - val_mae: 0.0509

Epoch 119/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0018 - mae: 0.0317 - val_loss: 0.0060 - val_mae: 0.0499

Epoch 120/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0017 - mae: 0.0309 - val_loss: 0.0064 - val_mae: 0.0512

Epoch 121/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0024 - mae: 0.0355 - val_loss: 0.0063 - val_mae: 0.0514

Epoch 122/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0024 - mae: 0.0379 - val_loss: 0.0075 - val_mae: 0.0617

Epoch 123/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0028 - mae: 0.0388 - val_loss: 0.0055 - val_mae: 0.0484

Epoch 124/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0020 - mae: 0.0330 - val_loss: 0.0065 - val_mae: 0.0531

Epoch 125/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0018 - mae: 0.0325 - val_loss: 0.0063 - val_mae: 0.0504

Epoch 126/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0017 - mae: 0.0306 - val_loss: 0.0065 - val_mae: 0.0512

Epoch 127/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0018 - mae: 0.0317 - val_loss: 0.0061 - val_mae: 0.0503

Epoch 128/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0016 - mae: 0.0292 - val_loss: 0.0060 - val_mae: 0.0502

Epoch 129/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0016 - mae: 0.0297 - val_loss: 0.0065 - val_mae: 0.0531

Epoch 130/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0016 - mae: 0.0302 - val_loss: 0.0063 - val_mae: 0.0503

Epoch 131/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0015 - mae: 0.0293 - val_loss: 0.0066 - val_mae: 0.0525

Epoch 132/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0016 - mae: 0.0305 - val_loss: 0.0060 - val_mae: 0.0499

Epoch 133/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0018 - mae: 0.0315 - val_loss: 0.0061 - val_mae: 0.0505

Epoch 134/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0016 - mae: 0.0299 - val_loss: 0.0066 - val_mae: 0.0515

Epoch 135/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0016 - mae: 0.0301 - val_loss: 0.0065 - val_mae: 0.0520

Epoch 136/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0018 - mae: 0.0321 - val_loss: 0.0064 - val_mae: 0.0509

Epoch 137/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0021 - mae: 0.0343 - val_loss: 0.0059 - val_mae: 0.0505

Epoch 138/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0018 - mae: 0.0303 - val_loss: 0.0058 - val_mae: 0.0489

Epoch 139/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0017 - mae: 0.0308 - val_loss: 0.0061 - val_mae: 0.0500

Epoch 140/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0015 - mae: 0.0287 - val_loss: 0.0062 - val_mae: 0.0494

Epoch 141/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0015 - mae: 0.0285 - val_loss: 0.0062 - val_mae: 0.0496

Epoch 142/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0014 - mae: 0.0280 - val_loss: 0.0061 - val_mae: 0.0497

Epoch 143/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0014 - mae: 0.0279 - val_loss: 0.0061 - val_mae: 0.0499

Epoch 144/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0016 - mae: 0.0300 - val_loss: 0.0061 - val_mae: 0.0492

Epoch 145/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0015 - mae: 0.0293 - val_loss: 0.0064 - val_mae: 0.0512

Epoch 146/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0017 - mae: 0.0304 - val_loss: 0.0065 - val_mae: 0.0515

Epoch 147/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0024 - mae: 0.0374 - val_loss: 0.0062 - val_mae: 0.0497

Epoch 148/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0017 - mae: 0.0316 - val_loss: 0.0061 - val_mae: 0.0515

Epoch 149/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0017 - mae: 0.0305 - val_loss: 0.0062 - val_mae: 0.0523

Epoch 150/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0015 - mae: 0.0281 - val_loss: 0.0059 - val_mae: 0.0492

Epoch 151/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0015 - mae: 0.0283 - val_loss: 0.0062 - val_mae: 0.0512

Epoch 152/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0015 - mae: 0.0289 - val_loss: 0.0062 - val_mae: 0.0501

Epoch 153/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0014 - mae: 0.0283 - val_loss: 0.0061 - val_mae: 0.0488

Epoch 154/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0014 - mae: 0.0277 - val_loss: 0.0061 - val_mae: 0.0492

Epoch 155/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0014 - mae: 0.0272 - val_loss: 0.0063 - val_mae: 0.0489

Epoch 156/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0013 - mae: 0.0268 - val_loss: 0.0063 - val_mae: 0.0494

Epoch 157/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0013 - mae: 0.0269 - val_loss: 0.0062 - val_mae: 0.0494

Epoch 158/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0012 - mae: 0.0259 - val_loss: 0.0063 - val_mae: 0.0489

Epoch 159/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0013 - mae: 0.0260 - val_loss: 0.0062 - val_mae: 0.0500

Epoch 160/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0013 - mae: 0.0263 - val_loss: 0.0073 - val_mae: 0.0537

Epoch 161/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0023 - mae: 0.0360 - val_loss: 0.0071 - val_mae: 0.0572

Epoch 162/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0022 - mae: 0.0360 - val_loss: 0.0069 - val_mae: 0.0546

Epoch 163/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0022 - mae: 0.0342 - val_loss: 0.0066 - val_mae: 0.0527

Epoch 164/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0022 - mae: 0.0356 - val_loss: 0.0061 - val_mae: 0.0509

Epoch 165/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0018 - mae: 0.0331 - val_loss: 0.0065 - val_mae: 0.0516

Epoch 166/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0019 - mae: 0.0319 - val_loss: 0.0063 - val_mae: 0.0499

Epoch 167/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0018 - mae: 0.0325 - val_loss: 0.0077 - val_mae: 0.0570

Epoch 168/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0022 - mae: 0.0374 - val_loss: 0.0066 - val_mae: 0.0519

Epoch 169/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0022 - mae: 0.0365 - val_loss: 0.0066 - val_mae: 0.0520

Epoch 170/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0023 - mae: 0.0377 - val_loss: 0.0058 - val_mae: 0.0501

Epoch 171/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0016 - mae: 0.0311 - val_loss: 0.0061 - val_mae: 0.0517

Epoch 172/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0016 - mae: 0.0300 - val_loss: 0.0060 - val_mae: 0.0501

Epoch 173/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0018 - mae: 0.0322 - val_loss: 0.0060 - val_mae: 0.0498

Epoch 174/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0014 - mae: 0.0274 - val_loss: 0.0061 - val_mae: 0.0494

Epoch 175/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0013 - mae: 0.0269 - val_loss: 0.0063 - val_mae: 0.0495

Epoch 176/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0012 - mae: 0.0263 - val_loss: 0.0061 - val_mae: 0.0495

Epoch 177/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0012 - mae: 0.0258 - val_loss: 0.0060 - val_mae: 0.0501

Epoch 178/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0027 - mae: 0.0401 - val_loss: 0.0057 - val_mae: 0.0493

Epoch 179/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0022 - mae: 0.0354 - val_loss: 0.0061 - val_mae: 0.0501

Epoch 180/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0016 - mae: 0.0301 - val_loss: 0.0063 - val_mae: 0.0511

Epoch 181/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0014 - mae: 0.0275 - val_loss: 0.0070 - val_mae: 0.0533

Epoch 182/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0013 - mae: 0.0277 - val_loss: 0.0059 - val_mae: 0.0485

Epoch 183/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0015 - mae: 0.0289 - val_loss: 0.0061 - val_mae: 0.0484

Epoch 184/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0013 - mae: 0.0271 - val_loss: 0.0071 - val_mae: 0.0546

Epoch 185/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0016 - mae: 0.0305 - val_loss: 0.0066 - val_mae: 0.0513

Epoch 186/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0013 - mae: 0.0270 - val_loss: 0.0061 - val_mae: 0.0483

Epoch 187/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0013 - mae: 0.0267 - val_loss: 0.0062 - val_mae: 0.0481

Epoch 188/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0014 - mae: 0.0283 - val_loss: 0.0066 - val_mae: 0.0496

Epoch 189/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0013 - mae: 0.0261 - val_loss: 0.0060 - val_mae: 0.0476

Epoch 190/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0013 - mae: 0.0266 - val_loss: 0.0062 - val_mae: 0.0483

Epoch 191/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0016 - mae: 0.0300 - val_loss: 0.0070 - val_mae: 0.0524

Epoch 192/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0014 - mae: 0.0287 - val_loss: 0.0065 - val_mae: 0.0515

Epoch 193/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0014 - mae: 0.0283 - val_loss: 0.0062 - val_mae: 0.0481

Epoch 194/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0013 - mae: 0.0269 - val_loss: 0.0059 - val_mae: 0.0482

Epoch 195/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0013 - mae: 0.0264 - val_loss: 0.0060 - val_mae: 0.0490

Epoch 196/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0012 - mae: 0.0251 - val_loss: 0.0059 - val_mae: 0.0480

Epoch 197/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0012 - mae: 0.0255 - val_loss: 0.0063 - val_mae: 0.0510

Epoch 198/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0013 - mae: 0.0277 - val_loss: 0.0059 - val_mae: 0.0479

Epoch 199/200

12/12 [==============================] - 0s 2ms/step - loss: 0.0012 - mae: 0.0257 - val_loss: 0.0065 - val_mae: 0.0513

Epoch 200/200

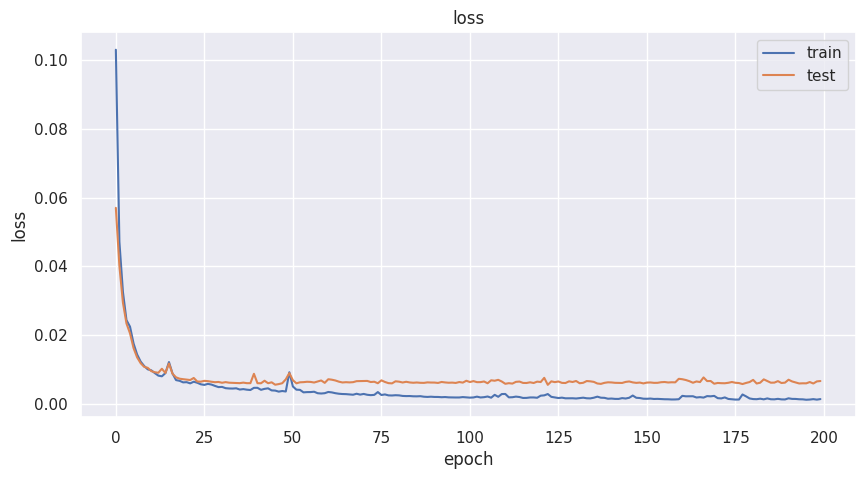

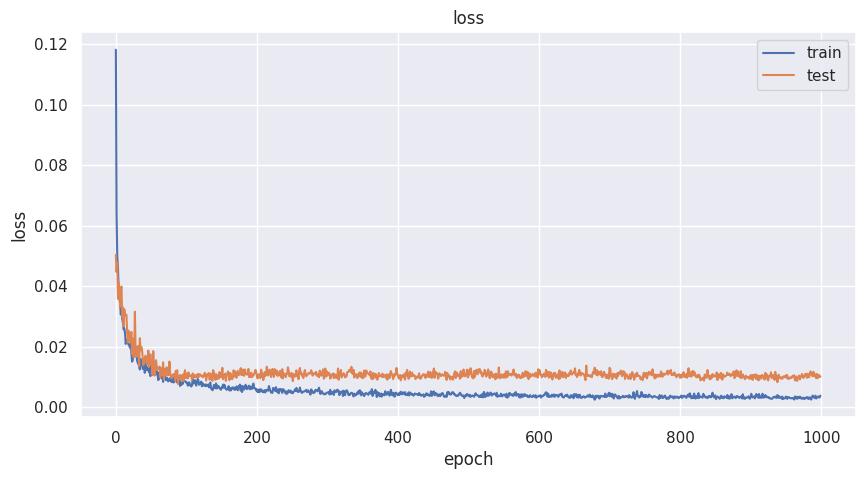

12/12 [==============================] - 0s 2ms/step - loss: 0.0014 - mae: 0.0272 - val_loss: 0.0066 - val_mae: 0.0507print(results.history.keys())dict_keys(['loss', 'mae', 'val_loss', 'val_mae'])loss : 학습 데이터 비용

mae : 학습 데이터 오차

val_loss : 테스트 데이터 비용

val_mae : 테스트 데이터 오차
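`results.history` is a plain dictionary with one list per key and one value per epoch, so it can be inspected without plotting. The sketch below is only an illustration: the numbers are the last five epochs (196 to 200) copied from the training log above, and the helper names `best_epoch` and `mean_gap` are hypothetical, not part of Keras.

```python
# Last five epochs (196-200) copied from the training log above.
history = {
    "loss":     [0.0012, 0.0012, 0.0013, 0.0012, 0.0014],
    "mae":      [0.0251, 0.0255, 0.0277, 0.0257, 0.0272],
    "val_loss": [0.0059, 0.0063, 0.0059, 0.0065, 0.0066],
    "val_mae":  [0.0480, 0.0510, 0.0479, 0.0513, 0.0507],
}
FIRST_EPOCH = 196  # epoch number of the first entry in each list


def best_epoch(history, key="val_mae", first_epoch=1):
    """Return (epoch number, value) for the smallest value stored under `key`."""
    values = history[key]
    idx = min(range(len(values)), key=values.__getitem__)
    return first_epoch + idx, values[idx]


def mean_gap(history, train_key="loss", val_key="val_loss"):
    """Average difference between the test curve and the training curve."""
    gaps = [v - t for t, v in zip(history[train_key], history[val_key])]
    return sum(gaps) / len(gaps)


epoch, value = best_epoch(history, "val_mae", FIRST_EPOCH)
print(f"lowest val_mae {value:.4f} at epoch {epoch}")
print(f"mean (val_loss - loss) gap: {mean_gap(history):.4f}")
```

With the real `results.history` the same helpers would run over all 200 epochs. A test cost that stays well above the training cost, as in these five epochs, is one sign of overfitting.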

# Change in loss over training epochs

plt.figure(figsize=(10,5))

plt.plot(results.history['loss'])

plt.plot(results.history['val_loss'])

plt.title('loss')

plt.xlabel('epoch')

plt.ylabel('loss')

plt.legend(['train','test'],loc='upper right')

plt.show()

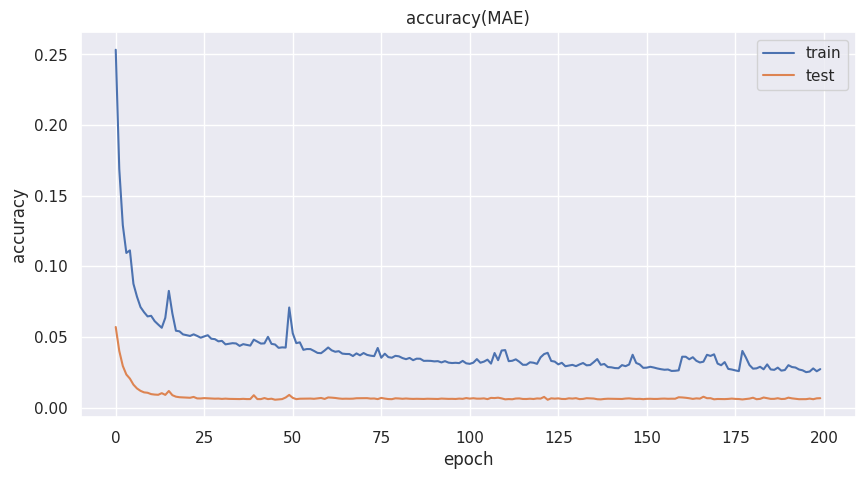

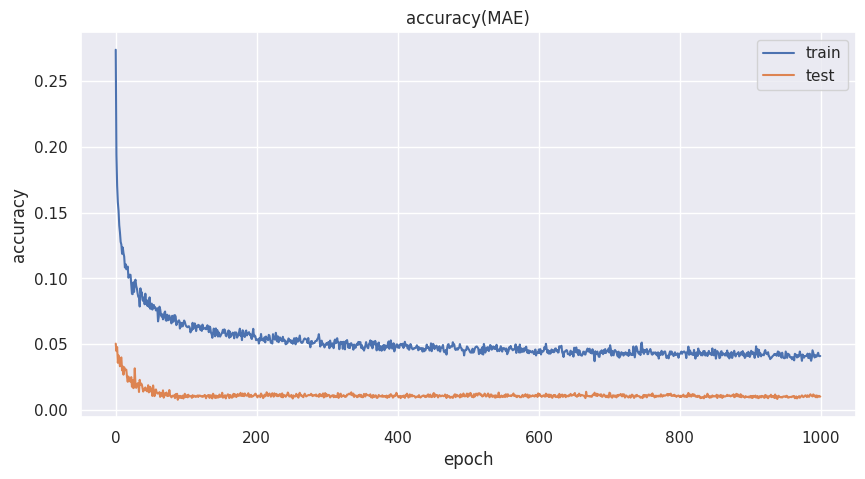

# Change in error (MAE) over training epochs

plt.figure(figsize=(10,5))

plt.plot(results.history['mae'])

plt.plot(results.history['val_mae']) # test-data MAE, the same metric as the train curve

plt.title('MAE')

plt.xlabel('epoch')

plt.ylabel('MAE')

plt.legend(['train','test'],loc='upper right')

plt.show()

7. Prediction

# Predict on the test data

y_pred=model.predict(X_test_norm).flatten()

# Inverse-transform the predictions back to the original price scale

y_pred_inverse=scalerY.inverse_transform(y_pred.reshape(-1,1))

print(y_pred_inverse[0:10])

5/5 [==============================] - 0s 540us/step

[[50.411358 ]

[13.942274 ]

[ 6.1426053]

[10.471625 ]

[30.684546 ]

[44.55355 ]

[26.434183 ]

[31.944752 ]

[32.296173 ]

[23.545477 ]]# 오차측정(MAE)

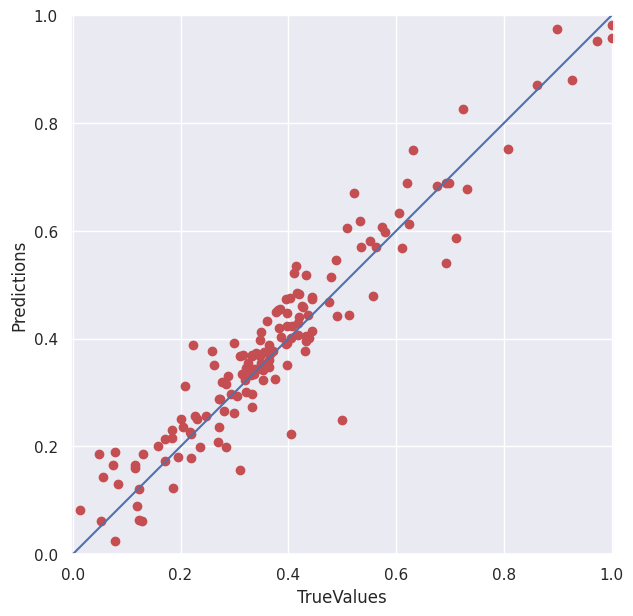

print('MAE:%.2f' %mean_absolute_error(y_test, y_pred_inverse))MAE:2.28# 실제 값 대비 예측 값의 산포도

plt.figure(figsize=(7,7))

plt.scatter(y_test_norm,y_pred,c='r')

plt.xlabel('TrueValues')

plt.ylabel('Predictions')

plt.axis('equal')

plt.xlim(0,1)

plt.ylim(0,1)

plt.plot([0,1],[0,1])

plt.show()
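The MAE of 2.28 is in the original price units, while the `val_mae` of 0.0507 in the last training epoch is on the normalized 0 to 1 scale. Min-max scaling is linear, so the two differ only by the factor (max - min) of the target. If `scalerY` was fitted on targets spanning 5 to 50 (an assumption, since the fitting code is outside this section), then 0.0507 × 45 ≈ 2.28, which matches. The sketch below checks that relationship with a plain-Python stand-in for the default (0, 1) min-max scaling; all function names and data values are hypothetical.

```python
def fit_minmax(values):
    """Return (min, max), the two statistics a min-max scaler learns."""
    return min(values), max(values)


def transform(values, lo, hi):
    """Scale values linearly so that lo maps to 0 and hi maps to 1."""
    return [(v - lo) / (hi - lo) for v in values]


def inverse_transform(values, lo, hi):
    """Map normalized values back to the original scale."""
    return [v * (hi - lo) + lo for v in values]


def mae(y_true, y_pred):
    """Mean absolute error between two equally long sequences."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


# Hypothetical prices and normalized predictions (illustrative values only).
y_true = [5.0, 20.0, 35.0, 50.0]
lo, hi = fit_minmax(y_true)              # 5.0 and 50.0, so the range is 45.0
y_true_norm = transform(y_true, lo, hi)
y_pred_norm = [0.05, 0.30, 0.70, 0.95]

mae_norm = mae(y_true_norm, y_pred_norm)
mae_orig = mae(y_true, inverse_transform(y_pred_norm, lo, hi))

print(f"MAE on normalized scale: {mae_norm:.4f}")
print(f"MAE on original scale:   {mae_orig:.4f}")
print(f"normalized MAE x range:  {mae_norm * (hi - lo):.4f}")
```

The last two printed values agree, which is why an MAE computed on `y_test` and `y_pred_inverse` can be compared directly with `val_mae` once the range of the target is known.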

8. Adding dropout to the model

from keras.layers import Dropout

model=Sequential() # sequential model

model.add(Dense(60,activation='relu', input_shape=(12,))) # input layer and hidden layer 1

model.add(Dropout(0.5)) # 50% dropout between hidden layers 1 and 2

model.add(Dense(60,activation='relu')) # hidden layer 2

model.add(Dense(30,activation='relu')) # hidden layer 3

model.add(Dropout(0.2)) # 20% dropout between hidden layer 3 and the output layer

model.add(Dense(1)) # output layer (linear activation)

model.summary()

Model: "sequential_5"

_________________________________________________________________

 Layer (type)                Output Shape              Param #

=================================================================

 dense_20 (Dense)            (None, 60)                780

 dropout_2 (Dropout)         (None, 60)                0

 dense_21 (Dense)            (None, 60)                3660

 dense_22 (Dense)            (None, 30)                1830

 dropout_3 (Dropout)         (None, 30)                0

 dense_23 (Dense)            (None, 1)                 31

=================================================================

Total params: 6,301

Trainable params: 6,301

Non-trainable params: 0

_________________________________________________________________

model.compile(optimizer='adam', loss='mse', metrics=['mae'])

# Training call, commented out in the source; uncomment to retrain (1000 epochs)

# results=model.fit(X_train_norm, y_train_norm,

# validation_data=(X_test_norm, y_test_norm),

# epochs=1000, batch_size=20)

print(results.history.keys())

dict_keys(['loss', 'mae', 'val_loss', 'val_mae'])

# Change in loss over training epochs

plt.figure(figsize=(10,5))

plt.plot(results.history['loss'])

plt.plot(results.history['val_loss'])

plt.title('loss')

plt.xlabel('epoch')

plt.ylabel('loss')

plt.legend(['train','test'],loc='upper right')

plt.show()

# Change in error (MAE) over training epochs

plt.figure(figsize=(10,5))

plt.plot(results.history['mae'])

plt.plot(results.history['val_mae']) # test-data MAE, the same metric as the train curve

plt.title('MAE')

plt.xlabel('epoch')

plt.ylabel('MAE')

plt.legend(['train','test'],loc='upper right')

plt.show()

# Predict on the test data

y_pred=model.predict(X_test_norm).flatten()

# Inverse-transform the predictions back to the original price scale

y_pred_inverse=scalerY.inverse_transform(y_pred.reshape(-1,1))

print(y_pred_inverse[0:10])

5/5 [==============================] - 0s 517us/step

[[36.150234]

[15.184836]

[ 8.98333 ]

[12.427422]

[26.98419 ]

[34.612114]

[20.946304]

[27.669504]

[28.082466]

[23.544407]]# 오차측정(MAE)

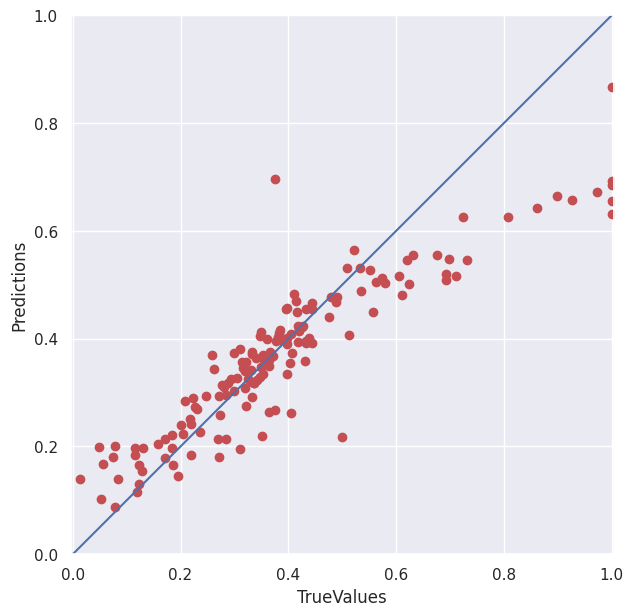

print('MAE:%.2f' %mean_absolute_error(y_test, y_pred_inverse))MAE:3.00# 실제 값 대비 예측 값의 산포도

plt.figure(figsize=(7,7))

plt.scatter(y_test_norm,y_pred,c='r')

plt.xlabel('TrueValues')

plt.ylabel('Predictions')

plt.axis('equal')

plt.xlim(0,1)

plt.ylim(0,1)

plt.plot([0,1],[0,1])

plt.show()
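The `Dropout(0.5)` and `Dropout(0.2)` layers in this section zero a random fraction of their inputs during training and scale the surviving values by 1/(1 - rate); at prediction time they pass values through unchanged. The following is a minimal plain-Python sketch of that behaviour, not the Keras implementation. The function name `dropout` and the test vector are hypothetical.

```python
import random


def dropout(activations, rate, training=True, rng=random):
    """Inverted dropout: zero each value with probability `rate`
    and scale the survivors by 1 / (1 - rate)."""
    if not training or rate == 0.0:
        return list(activations)      # identity at prediction time
    keep = 1.0 - rate
    return [a / keep if rng.random() >= rate else 0.0 for a in activations]


rng = random.Random(0)                # fixed seed so the run is repeatable
hidden = [1.0] * 10000                # hypothetical layer outputs

dropped = dropout(hidden, rate=0.5, rng=rng)
zero_fraction = dropped.count(0.0) / len(dropped)
mean_after = sum(dropped) / len(dropped)

print(f"fraction zeroed: {zero_fraction:.3f}")   # close to the rate, 0.5
print(f"mean after dropout: {mean_after:.3f}")   # close to the original mean, 1.0
```

Scaling the survivors keeps the expected sum of the layer's outputs the same in training and in prediction, so `model.predict` needs no extra correction.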

9. Dropout on the input nodes

model=Sequential() # sequential model

model.add(Dropout(0.2, input_shape=(12,))) # dropout rate of 0.2 on the input nodes

model.add(Dense(60,activation='relu')) # hidden layer 1

model.add(Dense(60,activation='relu')) # hidden layer 2

model.add(Dense(30,activation='relu')) # hidden layer 3

model.add(Dense(1)) # output layer (linear activation)
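This model uses the same Dense stack as section 8 (12 inputs, then 60, 60, 30 and 1 units), and the section 8 summary shows that Dropout layers contribute 0 parameters. The parameter count should therefore be unchanged. A Dense layer has one weight per input-output pair plus one bias per output, which the short sketch below uses to reproduce the counts from that summary. The helper name `dense_params` is hypothetical.

```python
def dense_params(n_in, n_out):
    """Weights (n_in * n_out) plus biases (n_out) of a fully connected layer."""
    return n_in * n_out + n_out


# 12 input features, three hidden layers, one output node.
layer_sizes = [12, 60, 60, 30, 1]

counts = [dense_params(a, b) for a, b in zip(layer_sizes, layer_sizes[1:])]

for (a, b), n in zip(zip(layer_sizes, layer_sizes[1:]), counts):
    print(f"Dense {a:>2} -> {b:>2}: {n} params")
print("total:", sum(counts))
```

The per-layer values 780, 3660, 1830 and 31 and the total of 6,301 agree with the `model.summary()` output in section 8.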